-

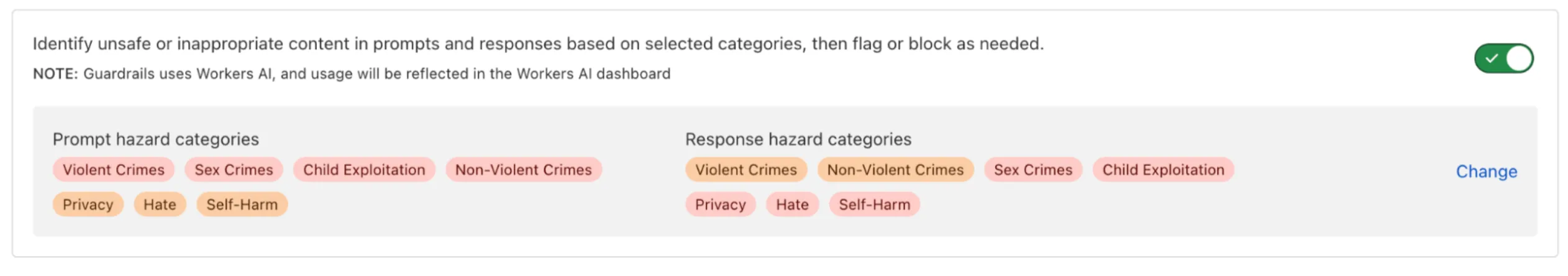

AI Gateway now includes Guardrails, to help you monitor your AI apps for harmful or inappropriate content and deploy safely.

Within the AI Gateway settings, you can configure:

- Guardrails: Enable or disable content moderation as needed.

- Evaluation scope: Select whether to moderate user prompts, model responses, or both.

- Hazard categories: Specify which categories to monitor and determine whether detected inappropriate content should be blocked or flagged.
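To illustrate how these three settings interact, here is a minimal sketch of the decision logic. It is not the AI Gateway API: the `GuardrailsConfig` shape, the `decide` function, and the category names are all hypothetical, and the real configuration lives in the AI Gateway settings.

```typescript
// Hypothetical model of the three settings above; not the AI Gateway API.
type Stage = "prompt" | "response";
type Scope = Stage | "both";
type Action = "ignore" | "flag" | "block";

interface GuardrailsConfig {
  enabled: boolean; // Guardrails on or off
  scope: Scope; // Evaluation scope
  categories: Record<string, Action>; // Hazard category -> action (names are placeholders)
}

// Returns the strictest action configured for any detected category.
function decide(config: GuardrailsConfig, stage: Stage, detected: string[]): Action {
  if (!config.enabled) return "ignore";
  if (config.scope !== "both" && config.scope !== stage) return "ignore";

  let result: Action = "ignore";
  for (const category of detected) {
    const action: Action = config.categories[category] ?? "ignore";
    if (action === "block") return "block"; // blocking always wins
    if (action === "flag") result = "flag";
  }
  return result;
}
```

In this sketch, content outside the configured scope is never evaluated, and a single blocked category is enough to block the whole request.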

Learn more in the blog ↗ or our documentation.

-

We've updated Workers AI to support JSON mode, enabling applications to request a structured output response when interacting with AI models.
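As a rough sketch, a JSON mode request might be built like this. The helper name `buildJsonModeInput`, the prompt, and the schema are illustrative assumptions, as are the binding name `AI` and the example model in the trailing comment; check the Workers AI documentation for the models that support JSON mode and for the exact request shape.

```typescript
// Sketch only: builds the input for a JSON mode request.
// The schema below is an illustrative example, not a required shape.
interface JsonSchema {
  type: "object";
  properties: Record<string, { type: string }>;
  required: string[];
}

interface JsonModeInput {
  messages: { role: "system" | "user"; content: string }[];
  response_format: { type: "json_schema"; json_schema: JsonSchema };
}

function buildJsonModeInput(prompt: string): JsonModeInput {
  return {
    messages: [
      { role: "system", content: "Extract data about a country." },
      { role: "user", content: prompt },
    ],
    // Ask the model to answer with JSON that matches this schema.
    response_format: {
      type: "json_schema",
      json_schema: {
        type: "object",
        properties: {
          name: { type: "string" },
          capital: { type: "string" },
        },
        required: ["name", "capital"],
      },
    },
  };
}

// Inside a Worker with an AI binding (assumed here to be named `AI`):
//   const result = await env.AI.run(
//     "@cf/meta/llama-3.1-8b-instruct", // example model; verify JSON mode support
//     buildJsonModeInput("Tell me about India."),
//   );
```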

-

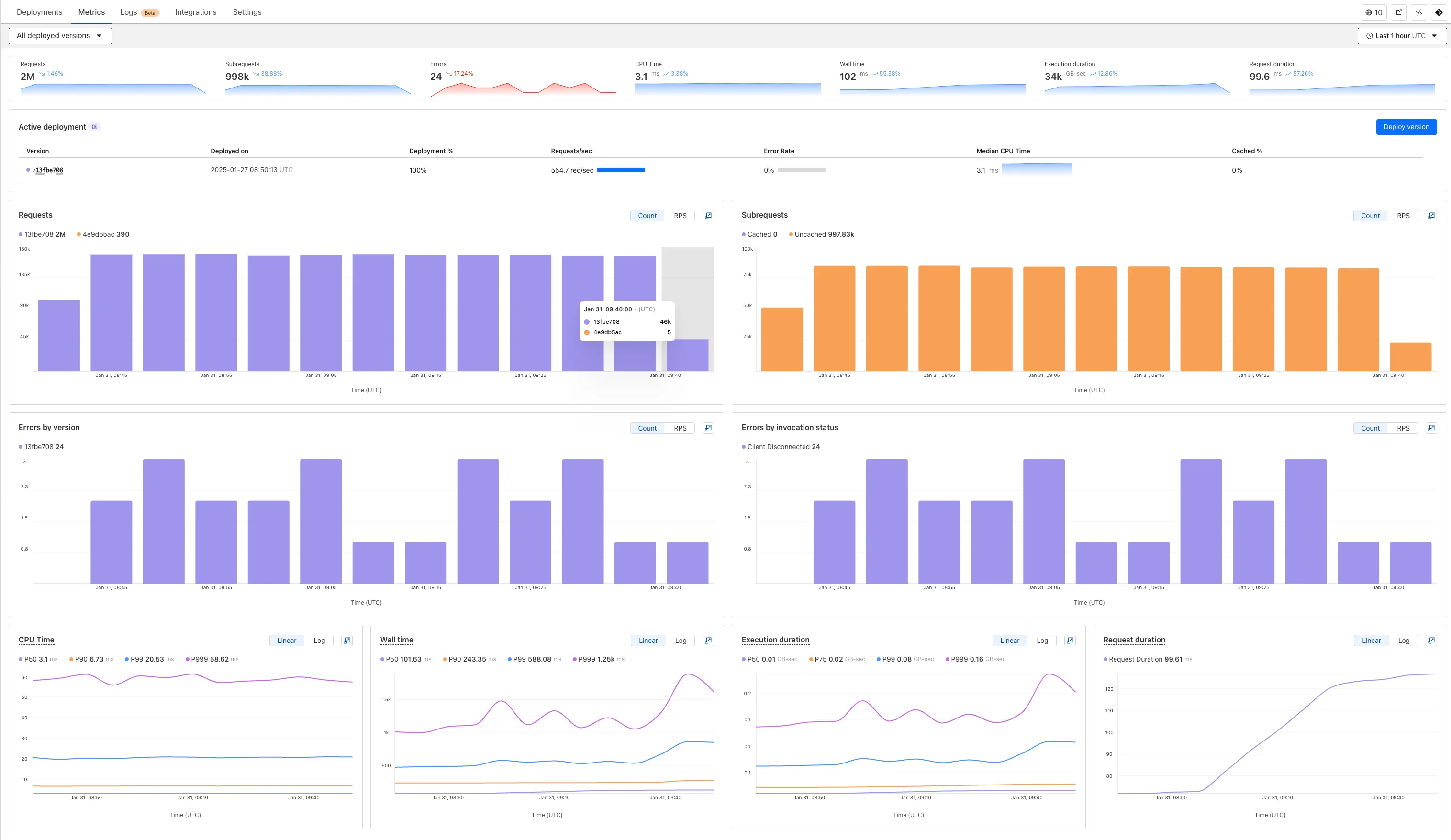

Workflows now supports up to 4,500 concurrent (running) instances, up from the previous limit of 100. This limit will continue to increase during the Workflows open beta. This increase applies to all users on the Workers Paid plan, and takes effect immediately.

Review the Workflows limits documentation, or dive into the get started guide to start building on Workflows.

-

We've released the agents-sdk ↗, a package and set of tools that help you build and ship AI Agents.

You can get up and running with a chat-based AI Agent ↗ (and deploy it to Workers) that uses the `agents-sdk`, tool calling, and state syncing with a React-based front-end by running the following command:

```sh
npm create cloudflare@latest agents-starter -- --template="cloudflare/agents-starter"
# open up README.md and follow the instructions
```

You can also add an Agent to any existing Workers application by installing the `agents-sdk` package directly

```sh
npm i agents-sdk
```

... and then define your first Agent:

```ts
import { Agent } from 'agents-sdk';

export class YourAgent extends Agent<Env> {
  // Build it out
  // Access state on this.state or query the Agent's database via this.sql
  // Handle WebSocket events with onConnect and onMessage
  // Run tasks on a schedule with this.schedule
  // Call AI models
  // ... and/or call other Agents.
}
```

Head over to the Agents documentation to learn more about the `agents-sdk`, the SDK APIs, as well as how to test and deploy agents to production.

-

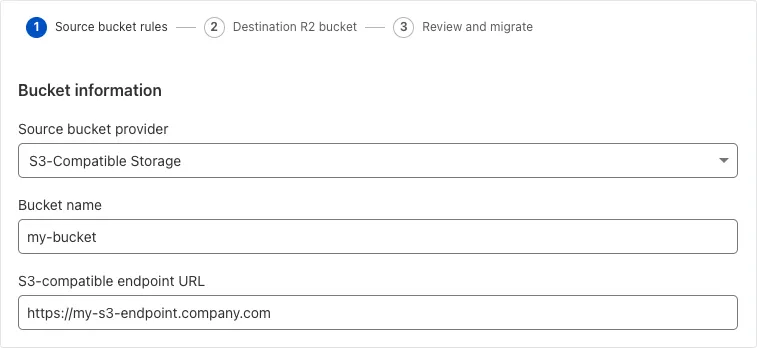

Super Slurper can now migrate data from any S3-compatible object storage provider to Cloudflare R2. This includes transfers from services like MinIO, Wasabi, Backblaze B2, and DigitalOcean Spaces.

For more information on Super Slurper and how to migrate data from your existing S3-compatible storage buckets to R2, refer to our documentation.

-

You can now interact with the Images API directly in your Worker.

This allows more fine-grained control over transformation request flows and cache behavior. For example, you can resize, manipulate, and overlay images without requiring them to be accessible through a URL.

The Images binding can be configured in the Cloudflare dashboard for your Worker or in the `wrangler.toml` file in your project's directory:

```toml
[images]
binding = "IMAGES" # i.e. available in your Worker on env.IMAGES
```

Within your Worker code, you can interact with this binding by using `env.IMAGES`.

Here's how you can rotate and resize an image, then output the image as AVIF:

```ts
const info = await env.IMAGES.info(stream);
// stream contains a valid image, and width/height is available on the info object

const response = (
  await env.IMAGES.input(stream)
    .transform({ rotate: 90 })
    .transform({ width: 128 })
    .output({ format: "image/avif" })
).response();

return response;
```

For more information, refer to Images Bindings.

-

We've updated the Workers AI text generation models to include context window and limit definitions, and changed our APIs to estimate and validate the number of tokens in the input prompt rather than the number of characters.

This update allows developers to use larger context windows when interacting with Workers AI models, which can lead to better and more accurate results.

Our catalog page provides more information about each model's supported context window.

-

Previously, you could only configure Zaraz by going to each individual zone under your Cloudflare account. Now, if you’d like to get started with Zaraz or manage your existing configuration, you can navigate to the Tag Management ↗ section on the Cloudflare dashboard – this will make it easier to compare and configure the same settings across multiple zones.

These changes will not alter any existing configuration or entitlements for zones you already have Zaraz enabled on. If you’d like to edit existing configurations, you can go to the Tag Setup ↗ section of the dashboard, and select the zone you'd like to edit.

-

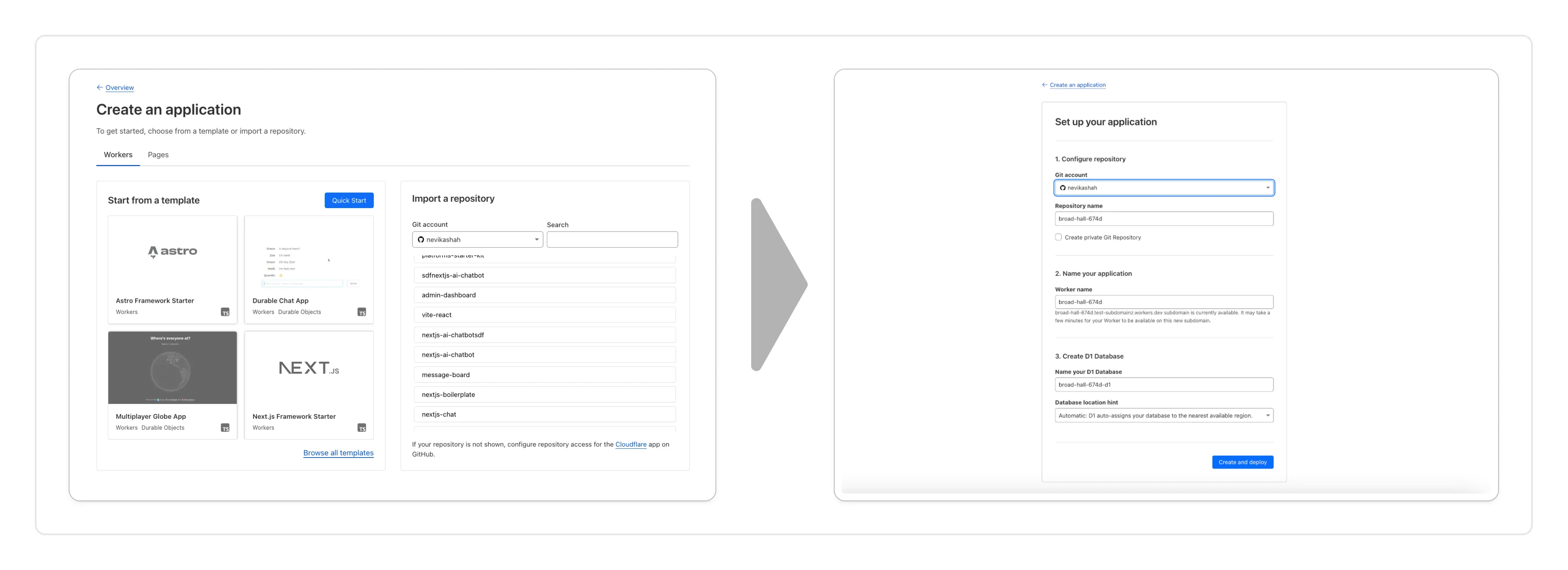

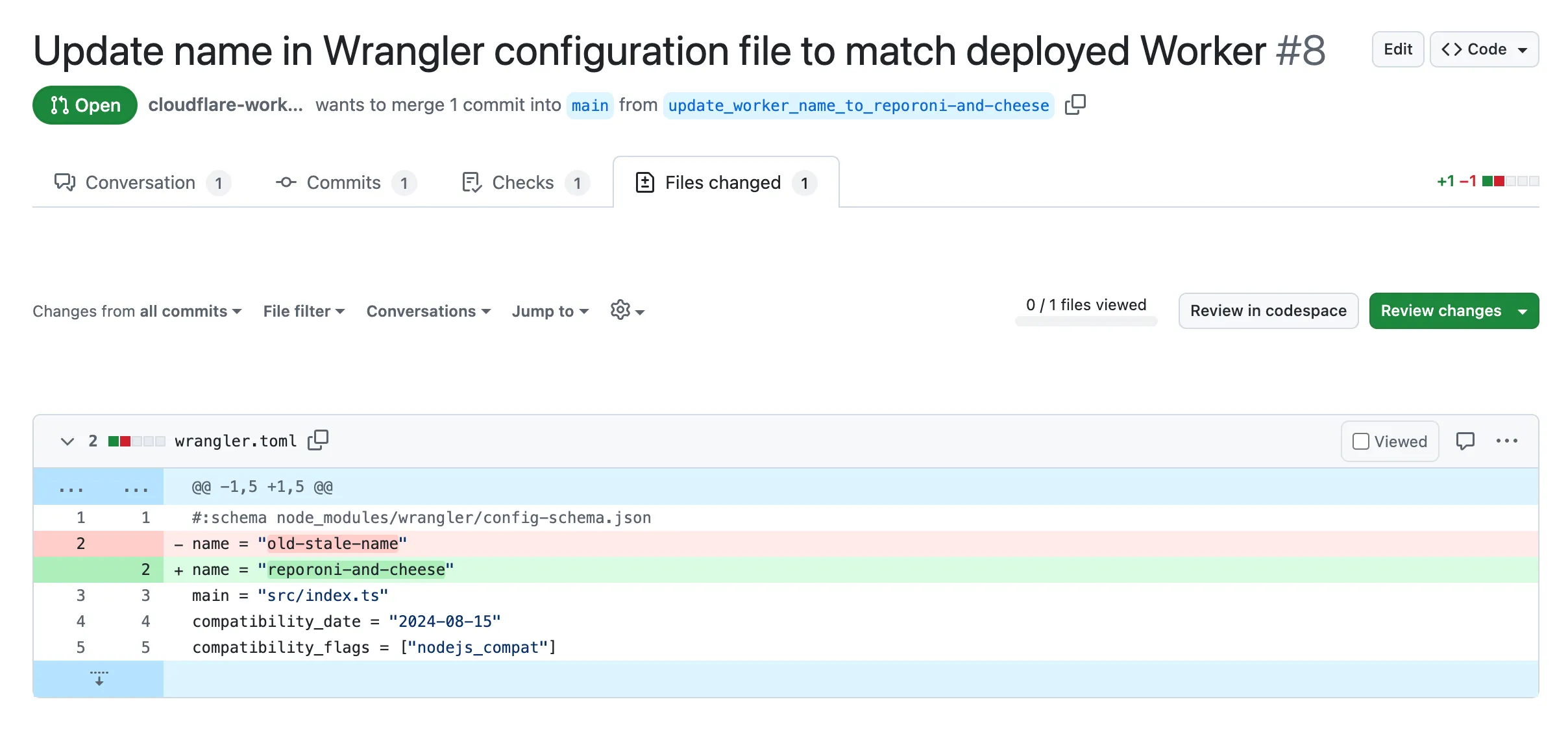

Small misconfigurations shouldn’t break your deployments. Cloudflare is introducing automatic error detection and fixes in Workers Builds, identifying common issues in your wrangler.toml or wrangler.jsonc and proactively offering fixes, so you spend less time debugging and more time shipping.

Here's how it works:

- Before running your build, Cloudflare checks your Worker's Wrangler configuration file (wrangler.toml or wrangler.jsonc) for common errors.

- Once you submit a build, if Cloudflare finds an error it can fix, it will submit a pull request to your repository that fixes it.

- Once you merge this pull request, Cloudflare will run another build.

We're starting with fixing name mismatches between your Wrangler file and the Cloudflare dashboard, a top cause of build failures.
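The name-mismatch fix can be pictured with a small sketch. This is not how Workers Builds is implemented: `fixWorkerName` is a hypothetical helper, it uses simplified line-based matching rather than a real TOML parser, and it assumes the fix rewrites the `name` in the Wrangler file to match the dashboard.

```typescript
// Hypothetical sketch: detect a Worker name mismatch and produce a patched config.
// Matches the first line that starts with `name = "..."`; a real tool would parse TOML properly.
const NAME_LINE = /^name\s*=\s*"([^"]*)"/m;

// Returns the patched file contents, or null if no fix is needed (or no name is found).
function fixWorkerName(toml: string, dashboardName: string): string | null {
  const match = NAME_LINE.exec(toml);
  if (match === null || match[1] === dashboardName) return null;
  // Use a replacer function so characters like `$` in the name are not treated specially.
  return toml.replace(NAME_LINE, () => `name = "${dashboardName}"`);
}
```

The patched contents returned here correspond to what would go into the pull request; merging it is what triggers the follow-up build.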

This is just the beginning. We want your feedback on what other errors we should catch and fix next. Let us know in the Cloudflare Developers Discord, #workers-and-pages-feature-suggestions ↗.

-

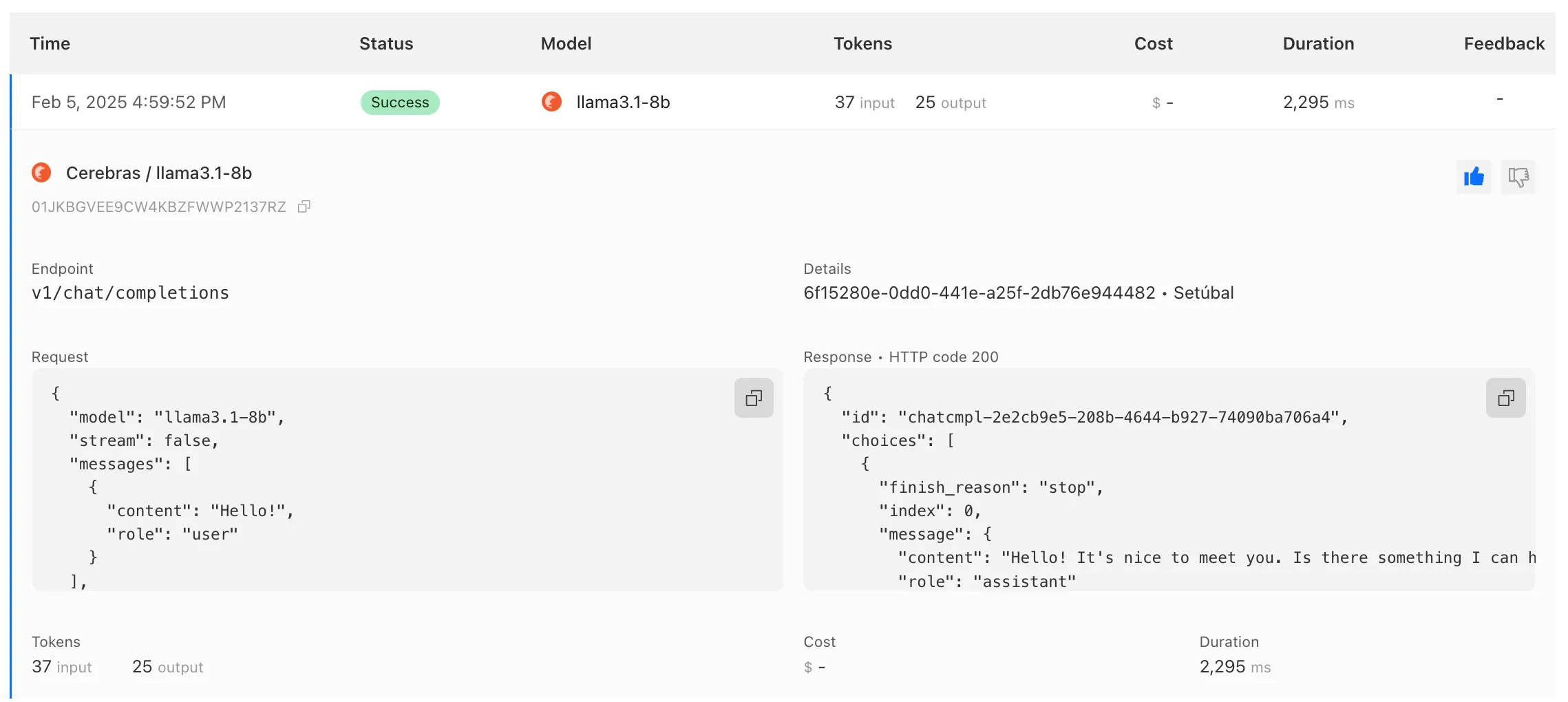

We've updated the Workers AI pricing to include the latest models and how model usage maps to Neurons.

- Each model's core input format(s) (tokens, audio seconds, images, etc.) now include mappings to Neurons, making it easier to understand how your included Neuron volume is consumed and how you are charged at scale.

- Per-model pricing, instead of the previous bucket approach, allows us to be more flexible on how models are charged based on their size, performance and capabilities. As we optimize each model, we can then pass on savings for that model.

- You will still only pay for what you consume: Workers AI inference is serverless, and not billed by the hour.

Going forward, models will be launched with their associated Neuron costs, and we'll be updating the Workers AI dashboard and API to reflect consumption in both raw units and Neurons. Visit the Workers AI pricing page to learn more.
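To make the mapping from raw units to Neurons concrete, here is a minimal sketch of the arithmetic for a token-based model. The `ModelRate` shape and both function names are hypothetical, and every rate passed to them is a placeholder to be replaced with the figures from the Workers AI pricing page.

```typescript
// Hypothetical per-model mapping from tokens to Neurons (values come from the pricing page).
interface ModelRate {
  neuronsPerMillionInputTokens: number;
  neuronsPerMillionOutputTokens: number;
}

// Converts raw token usage into Neurons for one model.
function neuronsUsed(rate: ModelRate, inputTokens: number, outputTokens: number): number {
  return (
    (inputTokens / 1_000_000) * rate.neuronsPerMillionInputTokens +
    (outputTokens / 1_000_000) * rate.neuronsPerMillionOutputTokens
  );
}

// Charges only for Neurons beyond the included volume.
function billableCost(neurons: number, includedNeurons: number, pricePerThousandNeurons: number): number {
  const billable = Math.max(0, neurons - includedNeurons);
  return (billable / 1000) * pricePerThousandNeurons;
}
```

Because usage is metered in Neurons rather than by the hour, the cost is zero whenever consumption stays within the included volume.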